SQL Server 2016 introduced a new capability for AlwaysOn Availability Groups called Automatic Seeding. It streams a backup of the database using VDI across the log stream transport to the secondary replica. With this capability enabled, you do not have to manually back up and restore the database and transaction logs before starting data synchronization with the primary replica.

Now there are some prerequisites for using Automatic Seeding such as:

You can enable Automatic Seeding either before or after creating the Availability Group.

Sometimes you will find that Automatic Seeding doesn't work, in our example it will be because a data file already exists with the same name as the database we are seeding. Which is a common problem if you have previously removed the replica from the AG and are trying to re-join it. Unfortunately the UI doesn't give you any indication that seeding failed.

Enter Extended Events.

To demonstrate this I will create an Availability Group across two replicas. I am using the SQL Server AlwaysOn solution available from the Azure Market Place as this has an Availability Group already built, but for this demonstration I will create a new AG and Demo databases.

1. Setup the environment

I have added an additional disk to the SQLSERVER-0 node, and formatted this new volume and assigned the drive letter G:. I also created the path G:\Log and set the file permissions to full control for the sql service account.

2. Create the Demo Database

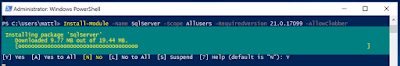

The following script can be used to setup the environment.

[01.CreateDemoDbs.sql]

3. Create the Availability Group

The following script can be used to setup the environment.

[02.CreateAG.sql]

4. Verify Environment

Now you should have a working Availability Group with the AutoseedDb01 synchronized and healthy between two replicas.

5. Create AlwaysOn_AutoseedMonitor Extended Events session

While we enabled the standard AlwaysOn Health extended events session, run the following script to create a new Extended Events session for monitoring Autoseeding

[03.ExtendedEventsSession.sql]

If you want to create this manually or to explore what other events are available you will need to make sure you select the Debug channel when using the Wizard to select events.

You can also filter the category to "dbseed" to further just view the events that relate to auto seeding

6. Add the 2nd database to AG

Now use the following script to add the 2nd database to the AG. NOTE: This DB has the log file on G:\ which does not exist on the replica.

[04.AddDbToAG.sql]

7. Investigating the Auto Seeding

You will notice that the DB was not created on the replica and it is listed in the AG's DBs but with a warning.

If you open the AG Dashboard you will see the warning message as well.

The messages do not include much detail.

8. Using DMVs

We can use the following DMVs to query the status of the seeding.

[05.DMVs.sql]

9. Using Extended Events

We are capturing the "error_reported" event along with the dbseed events. If you view the Event File on the replica where the failure happened. Locate the hadr_physical_seeding_progress event. There will be a lot of these events. In the below screen shot you can see the progress event for one of the last stages of the seeding process where it reports the failure.

Locate the hadr_physical_seeding_progress event with the internal_state_desc of "WaitingForBackupToStartSending". This is an early step in the auto seed process, then you should see error_reported events. Here is where you can find the real errors. Most likely it will be the first one reported. In our case it is:

Directory lookup for the file "G:\LOG\AutoseedDb02_log.ldf" failed with the operating system error 3(The system cannot find the path specified.).

There will be many more cases where this is useful. I have also used it before when I had old data or log files on the server from previous versions of a database I was adding back into the AG as sometimes that operation will fail (depending on permissions).

Let me know what situations you find

All the scripts used in this post are located in my GitHub https://github.com/Matticusau/SQLDemos/tree/master/SQLAlwaysOnAGAutoSeeding

Reference

Automatic seeding for secondary replicas

https://docs.microsoft.com/en-us/sql/database-engine/availability-groups/windows/automatic-seeding-secondary-replicas

Automatically initialize Always On Availability group

https://docs.microsoft.com/en-us/sql/database-engine/availability-groups/windows/automatically-initialize-always-on-availability-group

Legal Stuff: The contents of this blog is provided “as-is”. The information, opinions and views expressed are those of the author and do not necessarily state or reflect those of any other company with affiliation to the products discussed. This includes any URLs or Tools. The author does not accept any responsibility from the use of the information or tools mentioned within this blog, and recommends adequate evaluation against your own requirements to measure suitability.

Automatic Seeding has some prerequisites, such as:

- Data and Log file paths must be the same on all Replicas

- Databases must be in Full recovery model and at least one Full backup must have been taken of the database (to start the recovery chain)

- All other prerequisites for a database to be added to an Availability Group must also be met
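The first two prerequisites can be checked with a few catalog queries. This is only a rough sketch, run on the primary replica. It assumes the demo database name AutoseedDb02 used later in this post, and that backup history has not been purged from msdb.

```sql
-- Run on the primary replica. Substitute your own database name.
DECLARE @db sysname = N'AutoseedDb02';

-- The recovery model must be FULL.
SELECT name, recovery_model_desc
FROM sys.databases
WHERE name = @db;

-- At least one full backup (type 'D') must exist to start the recovery chain.
SELECT TOP (1) database_name, backup_finish_date
FROM msdb.dbo.backupset
WHERE database_name = @db
  AND type = 'D'
ORDER BY backup_finish_date DESC;

-- These file paths must also be valid on every secondary replica.
SELECT type_desc, physical_name
FROM sys.master_files
WHERE database_id = DB_ID(@db);
```

The last query only lists the paths. Confirming that they exist on each secondary is still a manual check.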

You can enable Automatic Seeding either before or after creating the Availability Group.
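For an Availability Group that already exists, enabling it might look like the sketch below. The AG name AutoseedAG and the replica name SQLSERVER-1 are hypothetical placeholders, not names taken from this post.

```sql
-- On the primary replica: switch an existing secondary to automatic seeding.
-- [AutoseedAG] and SQLSERVER-1 are hypothetical names.
ALTER AVAILABILITY GROUP [AutoseedAG]
    MODIFY REPLICA ON N'SQLSERVER-1'
    WITH (SEEDING_MODE = AUTOMATIC);
GO

-- On the secondary replica: allow the AG to create the seeded databases.
ALTER AVAILABILITY GROUP [AutoseedAG] GRANT CREATE ANY DATABASE;
GO
```

The two statements run on different instances, so connect to each replica in turn.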

Sometimes you will find that Automatic Seeding doesn't work. In our example it will be because the log file path used on the primary does not exist on the secondary replica. Another common cause is a data file that already exists with the same name as the database being seeded, which often happens if you have previously removed the replica from the AG and are trying to re-join it. Unfortunately the UI doesn't give you much indication of why seeding failed.

Enter Extended Events.

To demonstrate this I will create an Availability Group across two replicas. I am using the SQL Server AlwaysOn solution available from the Azure Marketplace, as this has an Availability Group already built, but for this demonstration I will create a new AG and demo databases.

1. Set up the environment

I have added an additional disk to the SQLSERVER-0 node, formatted the new volume, and assigned it the drive letter G:. I also created the path G:\Log and granted the SQL Server service account full control of it.

2. Create the Demo Database

The following script can be used to create the demo databases.

[01.CreateDemoDbs.sql]
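The actual script is in the GitHub repository linked at the end of this post. As a minimal sketch of what such a script might contain: the data path F:\Data is my assumption for illustration, while the log path matches the G:\Log folder created in step 1.

```sql
-- First demo database: default file locations (assumed identical on both replicas).
CREATE DATABASE [AutoseedDb01];
GO

-- Second demo database: log file on G:\Log, which exists only on SQLSERVER-0.
-- F:\Data is a hypothetical data path, not taken from the original script.
CREATE DATABASE [AutoseedDb02]
ON PRIMARY
    (NAME = N'AutoseedDb02',     FILENAME = N'F:\Data\AutoseedDb02.mdf')
LOG ON
    (NAME = N'AutoseedDb02_log', FILENAME = N'G:\Log\AutoseedDb02_log.ldf');
GO

-- Both databases need the FULL recovery model.
ALTER DATABASE [AutoseedDb01] SET RECOVERY FULL;
ALTER DATABASE [AutoseedDb02] SET RECOVERY FULL;
GO

-- A full backup starts the recovery chain.
-- Backing up to NUL discards the backup: acceptable for a demo only.
BACKUP DATABASE [AutoseedDb01] TO DISK = N'NUL';
BACKUP DATABASE [AutoseedDb02] TO DISK = N'NUL';
GO
```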

3. Create the Availability Group

The following script can be used to create the Availability Group.

[02.CreateAG.sql]
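Again, the real script is in the repository. A sketch of creating an AG with automatic seeding enabled from the start could look like this. The AG name, the second replica name SQLSERVER-1, and the endpoint URLs are all hypothetical, and it assumes the cluster and the mirroring endpoints already exist (as they do in the Marketplace solution).

```sql
-- On the primary replica (SQLSERVER-0).
-- AG name, SQLSERVER-1, and the endpoint URLs are hypothetical placeholders.
CREATE AVAILABILITY GROUP [AutoseedAG]
FOR DATABASE [AutoseedDb01]
REPLICA ON
    N'SQLSERVER-0' WITH (
        ENDPOINT_URL      = N'TCP://SQLSERVER-0.contoso.local:5022',
        AVAILABILITY_MODE = SYNCHRONOUS_COMMIT,
        FAILOVER_MODE     = AUTOMATIC,
        SEEDING_MODE      = AUTOMATIC),
    N'SQLSERVER-1' WITH (
        ENDPOINT_URL      = N'TCP://SQLSERVER-1.contoso.local:5022',
        AVAILABILITY_MODE = SYNCHRONOUS_COMMIT,
        FAILOVER_MODE     = AUTOMATIC,
        SEEDING_MODE      = AUTOMATIC);
GO

-- On the secondary replica (SQLSERVER-1): join the AG and let it create databases.
ALTER AVAILABILITY GROUP [AutoseedAG] JOIN;
ALTER AVAILABILITY GROUP [AutoseedAG] GRANT CREATE ANY DATABASE;
GO
```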

4. Verify Environment

You should now have a working Availability Group, with the AutoseedDb01 database synchronized and healthy on both replicas.
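If you prefer a query to the dashboard, something along these lines, run on the primary, shows the state of each database on each replica. It is a sketch using the standard AG catalog views and DMVs.

```sql
-- Run on the primary replica to see every replica's view of each AG database.
SELECT
    ag.name                          AS ag_name,
    ar.replica_server_name,
    adc.database_name,
    drs.synchronization_state_desc,   -- expect SYNCHRONIZED for synchronous commit
    drs.synchronization_health_desc   -- expect HEALTHY
FROM sys.dm_hadr_database_replica_states AS drs
JOIN sys.availability_replicas AS ar
    ON ar.replica_id = drs.replica_id
JOIN sys.availability_groups AS ag
    ON ag.group_id = drs.group_id
JOIN sys.availability_databases_cluster AS adc
    ON adc.group_database_id = drs.group_database_id
ORDER BY ar.replica_server_name, adc.database_name;
```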

5. Create AlwaysOn_AutoseedMonitor Extended Events session

Although the standard AlwaysOn Health Extended Events session is already enabled, run the following script to create a separate Extended Events session dedicated to monitoring automatic seeding.

[03.ExtendedEventsSession.sql]
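For reference, a session of this kind might be defined roughly as below. The selection of events and the target file name are my assumptions for this sketch, and the script in the repository may differ. Create it on every replica, since the failure is reported on the replica where it happens.

```sql
-- Sketch of a seeding monitor session. Event selection and file name are assumptions.
CREATE EVENT SESSION [AlwaysOn_AutoseedMonitor] ON SERVER
    ADD EVENT sqlserver.error_reported,
    ADD EVENT sqlserver.hadr_automatic_seeding_state_transition,
    ADD EVENT sqlserver.hadr_automatic_seeding_timeout,
    ADD EVENT sqlserver.hadr_db_manager_seeding_request_msg,
    ADD EVENT sqlserver.hadr_physical_seeding_backup_state_change,
    ADD EVENT sqlserver.hadr_physical_seeding_restore_state_change,
    ADD EVENT sqlserver.hadr_physical_seeding_failure,
    ADD EVENT sqlserver.hadr_physical_seeding_progress
    -- A relative file name is written to the instance's default LOG directory.
    ADD TARGET package0.event_file (
        SET filename = N'AlwaysOn_AutoseedMonitor.xel',
            max_file_size = (5),
            max_rollover_files = (4))
    WITH (
        EVENT_RETENTION_MODE = ALLOW_SINGLE_EVENT_LOSS,
        MAX_DISPATCH_LATENCY = 30 SECONDS,
        STARTUP_STATE = ON);
GO

ALTER EVENT SESSION [AlwaysOn_AutoseedMonitor] ON SERVER STATE = START;
GO
```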

If you want to create this session manually, or to explore what other events are available, make sure you select the Debug channel when using the Wizard to select events.

You can also filter the category to "dbseed" to narrow the list down to the events that relate to automatic seeding.
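Outside the Wizard, the available events can also be listed from the Extended Events metadata. This sketch filters on the event name rather than on the "dbseed" category, so treat it as an approximation of the Wizard's filter.

```sql
-- List seeding-related events by name (an approximation of the dbseed category).
SELECT
    p.name AS package_name,
    o.name AS event_name,
    o.description
FROM sys.dm_xe_objects AS o
JOIN sys.dm_xe_packages AS p
    ON p.guid = o.package_guid
WHERE o.object_type = N'event'
  AND o.name LIKE N'hadr%seeding%'
ORDER BY o.name;
```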

6. Add the second database to the AG

Now use the following script to add the second database to the AG. NOTE: This database has its log file on G:\, which does not exist on the secondary replica.

[04.AddDbToAG.sql]
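With automatic seeding enabled, adding the database comes down to a single statement on the primary. As before, AutoseedAG is a hypothetical AG name used only for this sketch.

```sql
-- On the primary replica. [AutoseedAG] is a hypothetical AG name.
-- With SEEDING_MODE = AUTOMATIC, this starts seeding to the secondary.
ALTER AVAILABILITY GROUP [AutoseedAG] ADD DATABASE [AutoseedDb02];
GO
```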

7. Investigating the Auto Seeding

You will notice that the database was not created on the secondary replica. It is listed among the AG's databases, but with a warning.

If you open the AG Dashboard you will see the warning message there as well.

Neither message includes much detail.

8. Using DMVs

We can use the following DMVs to query the status of the seeding.

[05.DMVs.sql]
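A sketch of the kind of queries involved is below. sys.dm_hadr_automatic_seeding keeps a history of seeding attempts, while sys.dm_hadr_physical_seeding_stats generally only has rows while a seeding operation is running.

```sql
-- History of automatic seeding attempts, newest first.
SELECT
    ag.name             AS ag_name,
    adc.database_name,
    s.start_time,
    s.completion_time,
    s.current_state,
    s.performed_seeding,
    s.failure_state_desc,
    s.error_code,
    s.number_of_attempts
FROM sys.dm_hadr_automatic_seeding AS s
JOIN sys.availability_groups AS ag
    ON ag.group_id = s.ag_id
JOIN sys.availability_databases_cluster AS adc
    ON adc.group_database_id = s.ag_db_id
ORDER BY s.start_time DESC;

-- Progress of seeding operations that are currently in flight.
SELECT
    local_database_name,
    remote_machine_name,
    role_desc,
    internal_state_desc,
    transferred_size_bytes,
    database_size_bytes,
    failure_code,
    failure_message
FROM sys.dm_hadr_physical_seeding_stats;
```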

9. Using Extended Events

We are capturing the "error_reported" event along with the dbseed events. View the event file on the replica where the failure happened and locate the hadr_physical_seeding_progress events. There will be a lot of them. The progress event for one of the last stages of the seeding process reports the failure.

Next, locate the hadr_physical_seeding_progress event with an internal_state_desc of "WaitingForBackupToStartSending". This is an early step in the auto seed process, and shortly after it you should see error_reported events. This is where you can find the real errors; most likely the cause will be the first one reported. In our case it is:

Directory lookup for the file "G:\LOG\AutoseedDb02_log.ldf" failed with the operating system error 3(The system cannot find the path specified.).
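If you would rather query the event file than browse it in the viewer, a sketch like this pulls out the progress and error events in time order. It assumes the session writes files whose names start with AlwaysOn_AutoseedMonitor into the instance's default LOG directory, and that internal_state_desc is exposed as a plain value in the event XML.

```sql
-- Run on the replica where seeding failed.
-- The file name pattern is an assumption; adjust it to match your session's target.
SELECT
    x.object_name AS event_name,
    x.event_xml.value('(event/@timestamp)[1]', 'datetime2') AS event_time_utc,
    -- Populated for hadr_physical_seeding_progress events.
    x.event_xml.value('(event/data[@name="internal_state_desc"]/value)[1]',
                      'nvarchar(256)') AS internal_state_desc,
    -- Populated for error_reported events.
    x.event_xml.value('(event/data[@name="message"]/value)[1]',
                      'nvarchar(max)') AS error_message
FROM (
    SELECT object_name, CAST(event_data AS xml) AS event_xml
    FROM sys.fn_xe_file_target_read_file(
             N'AlwaysOn_AutoseedMonitor*.xel', NULL, NULL, NULL)
    WHERE object_name IN (N'hadr_physical_seeding_progress', N'error_reported')
) AS x
ORDER BY event_time_utc;
```

Reading down the result, the error_message values that follow the "WaitingForBackupToStartSending" row are the ones to look at first.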

There will be many more cases where this is useful. I have also used it when old data or log files from a previous version of a database were still on the server as I added that database back into the AG, because that operation will sometimes fail (depending on permissions).

Let me know what situations you find.

All the scripts used in this post are located in my GitHub repository: https://github.com/Matticusau/SQLDemos/tree/master/SQLAlwaysOnAGAutoSeeding

Reference

Automatic seeding for secondary replicas

https://docs.microsoft.com/en-us/sql/database-engine/availability-groups/windows/automatic-seeding-secondary-replicas

Automatically initialize Always On Availability group

https://docs.microsoft.com/en-us/sql/database-engine/availability-groups/windows/automatically-initialize-always-on-availability-group

Legal Stuff: The contents of this blog are provided “as-is”. The information, opinions and views expressed are those of the author and do not necessarily state or reflect those of any other company with affiliation to the products discussed. This includes any URLs or Tools. The author does not accept any responsibility arising from the use of the information or tools mentioned within this blog, and recommends adequate evaluation against your own requirements to measure suitability.