StretchDB is a new capability in SQL Server 2016 that lets you effectively partition your data into warm and cold data and, more importantly, lets SQL Server automatically host the cold data in an Azure SQL Database. It sounds great in concept, and it works well in cases where you have a large amount of cold data that just needs to be queried and not updated (e.g. archived data for reporting).

Now, there is one critical thing you really need to be aware of, and that is what happens when it comes time to restore, or more specifically migrate, an on-premises database that is stretched. Let's take this scenario as an example.

You are planning to upgrade the production SQL Server to SQL Server 2016 SP1. The business needs do not allow for an in-place upgrade, so you have built a new server and are planning to migrate the production database to the new server.

It could be a DR scenario, but more likely the issues I am talking about will arise during migrations.

The issue is that after restoring the on-premises database you need to reauthorize its connection to the Azure SQL Database (official details at https://msdn.microsoft.com/en-us/library/mt733205.aspx). When you perform this step you have to specify the @with_copy parameter, which either points the database at the existing remote table in the Azure SQL Database (with_copy = 0) or creates a new copy of the remote table to use (with_copy = 1). The recommended approach is to use a new copy of the remote table (with_copy = 1). I also recommend this approach for database migrations, followed by a manual cleanup of the old table. The reason, as I will show in this post, is that if your old/current database is still in use and you point a new database at the same remote table, the data reconciliation processes of the two databases can conflict, and this results in cold data loss.

So let's explore the issue.

Firstly, here is the environment I am working in:

On-premises SQL Server 2016 SP1 (13.0.4001.0). I have one database, StretchDBDemo, which contains the following tables:

- dbo.Department: DepartmentID, DepartmentName, OfficeLoc

- dbo.People: PersonID, FullName, IsEmployee, PhoneNumber, EmailAddress

The full schema is available in the script at the end of this post.

We are going to use the dbo.People table to demonstrate this issue. So let's start with some data.

INSERT INTO [dbo].[People] ([PersonID], [FullName], [IsEmployee], [PhoneNumber], [EmailAddress]) VALUES (1, 'Matt', 1, '0', 'yourname@email.com');
INSERT INTO [dbo].[People] ([PersonID], [FullName], [IsEmployee], [PhoneNumber], [EmailAddress]) VALUES (2, 'Steve', 0, '0', 'yourname@email.com');

The next step is to set up the StretchDB feature. I did this through the GUI wizard (it can also be scripted in T-SQL), and it is pretty straightforward. Here are the steps I used:

- Right click on the Database StretchDBDemo in Object Explorer

- Select Tasks > Stretch > Enable

- In the GUI select Next

- Place a tick in the box next to the dbo.People table. Notice the warning icon; it is important to note, as it has some relevance to the behaviour we will explore.

- Click the "Entire Table" link under the Migrate column to launch the filter wizard

- Set up a filter called IsEmployee False, with the predicate "IsEmployee = 0". Click the Check button and then Done.

NOTE: If you don't have any rows in the dbo.People table that match this predicate you won't be able to proceed past this point.

- Click Next and then authenticate to Azure.

- Select either an existing Azure SQL Database Server or create a new StretchDB server.

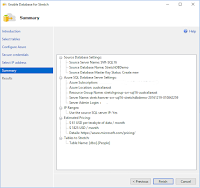

- Follow the wizard to completion. I recommend taking a screen snippet of the summary page for reference.

- You can then check that the StretchDb feature is working by using the Monitor from the Stretch menu on the Database object.

You could also query the following DMVs to check this manually:

SELECT * FROM sys.dm_db_rda_migration_status;
SELECT * FROM sys.dm_db_rda_schema_update_status;
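As a minimal sketch of that manual check, the query below lists the most recent migration batches first, so a batch that failed or moved no rows stands out. The column names are the ones I understand sys.dm_db_rda_migration_status to expose in SQL Server 2016; treat them as an assumption and fall back to SELECT * if your build differs.

```sql
USE [StretchDBDemo];
GO

-- Most recent Stretch migration batches for this database, newest first.
-- A non-NULL error_number points at a batch that did not complete cleanly.
SELECT TOP (20)
       OBJECT_NAME(table_id) AS table_name,
       migrated_rows,
       start_time_utc,
       end_time_utc,
       error_number,
       error_severity,
       error_state
FROM sys.dm_db_rda_migration_status
ORDER BY start_time_utc DESC;
```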

- You will need to check the configuration of StretchDB. This is critical, as we need the Azure SQL Server address.

-- the db config
SELECT * FROM sys.remote_data_archive_databases;
-- the tables config
SELECT * FROM sys.remote_data_archive_tables;
-- the data source
SELECT * FROM sys.external_data_sources;
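If you would rather see the three catalog views side by side, a join along these lines should return one row per stretched table together with its remote database name and server address. This is only a sketch: the join columns (remote_database_id, data_source_id) are my reading of the catalog views, so verify them against the SELECT * output above.

```sql
USE [StretchDBDemo];
GO

-- One row per stretched table: local name, remote table, remote database, server address.
SELECT OBJECT_SCHEMA_NAME(t.object_id) + N'.' + OBJECT_NAME(t.object_id) AS local_table,
       t.remote_table_name,
       t.filter_predicate,
       t.migration_direction_desc,
       d.remote_database_name,
       s.location AS remote_server_address
FROM sys.remote_data_archive_tables AS t
JOIN sys.remote_data_archive_databases AS d
    ON d.remote_database_id = t.remote_database_id
JOIN sys.external_data_sources AS s
    ON s.data_source_id = d.data_source_id;
```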

- Now that you have the remote server's address, in SSMS Object Explorer connect to the Azure SQL Database server

- Expand the Databases branch in Object Explorer, then expand your stretched remote database (the name is in the tables queried above). Then expand Tables. Note how no tables are displayed here. Any stretched tables are deliberately hidden, and in general practice you should not query them. However, we are troubleshooting/demonstrating an issue, so we will query the tables directly.

- Query sys.tables to find the name of the stretched table.

- Now query the stretched table in the Azure SQL Database. You should have 1 record for 'Steve'.

- Let's add a new record to our on-premises database that will be stretched to the remote server.

INSERT INTO [dbo].[People] ([PersonID], [FullName], [IsEmployee], [PhoneNumber], [EmailAddress]) VALUES (3, 'Chris', 0, '0','yourname@email.com');
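To see from the on-premises side where a row currently lives, one option is the REMOTE_DATA_ARCHIVE_OVERRIDE table hint. The sketch below assumes you are connected as a member of db_owner, which as far as I know is required for this hint. A row that matches the filter shows up under LOCAL_ONLY until it has been migrated, and under REMOTE_ONLY afterwards.

```sql
USE [StretchDBDemo];
GO

-- Rows still held on-premises (not eligible, or eligible but not yet migrated).
SELECT PersonID, FullName, IsEmployee
FROM dbo.People WITH (REMOTE_DATA_ARCHIVE_OVERRIDE = LOCAL_ONLY);

-- Rows that have already been migrated to the remote Azure SQL Database table.
SELECT PersonID, FullName, IsEmployee
FROM dbo.People WITH (REMOTE_DATA_ARCHIVE_OVERRIDE = REMOTE_ONLY);
```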

- Using the Monitor or the T-SQL queries from earlier, check on the status of the StretchDB feature. After some time, query the stretched table in the Azure SQL Database again to make sure the new record has been stretched there.

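Now the fun part starts. Let's simulate a database migration. In my lab I am just using the same server, but the experience is the same.

- Take a backup of the on-premises StretchDBDemo database. You could elect to disable StretchDB first and bring all the data back on-premises, but we trust the Azure backups to keep our data in the cloud safe.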

- Next restore the backup to the new database we are migrating the application to. I have used the database name StretchDbDemoNew.

- Once the database is restored it does not immediately start stretching data, as it needs to be reauthorized to use the remote server. This is outlined in https://msdn.microsoft.com/en-us/library/mt733205.aspx.

- First get the name of the credential that exists. If you are migrating to another server, you will need to recreate the credential on that server. The name of the credential needs to match the remote server address.

SELECT * FROM sys.database_scoped_credentials
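The backup and restore steps above could be scripted roughly as follows. The backup path, data file paths and logical file names are assumptions for my lab, not values from the demo scripts; RESTORE FILELISTONLY shows the real logical names to use in the MOVE clauses.

```sql
-- Back up the stretched on-premises database (hypothetical backup path).
BACKUP DATABASE [StretchDBDemo]
    TO DISK = N'C:\Backup\StretchDBDemo.bak'
    WITH INIT;
GO

-- Check the logical file names stored in the backup before restoring.
RESTORE FILELISTONLY
    FROM DISK = N'C:\Backup\StretchDBDemo.bak';
GO

-- Restore under the new name. Logical names and target paths are assumed.
RESTORE DATABASE [StretchDBDemoNew]
    FROM DISK = N'C:\Backup\StretchDBDemo.bak'
    WITH MOVE N'StretchDBDemo'     TO N'C:\Data\StretchDBDemoNew.mdf',
         MOVE N'StretchDBDemo_log' TO N'C:\Data\StretchDBDemoNew_log.ldf';
GO

-- Look up the credential name inside the restored database.
USE [StretchDBDemoNew];
GO
SELECT name, credential_identity
FROM sys.database_scoped_credentials;
```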

- Now use the following statement to reauthorize the restored database against the remote server:

-- reauthorize using the existing credential information
USE [StretchDBDemoNew];
GO
EXEC sp_rda_reauthorize_db @credential = N'<credential name>', @with_copy = 0;
GO

Here is where the problem begins.

When you execute sp_rda_reauthorize_db and specify with_copy = 0, it uses the existing Azure SQL Database as the endpoint. So now we actually have two on-premises databases, StretchDBDemo and StretchDBDemoNew, both pointing to the same Azure SQL Database table for stretched data. If you specify with_copy = 1, it creates a copy of the data in a new table, and therefore the old and new on-premises databases have different stretch data endpoints.

So why does this matter? Well, let's add some data to the new on-premises database, because we want to make sure it all works, as you would in any migration.
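A quick way to check whether two on-premises databases share a remote endpoint is to compare their Stretch metadata. This is a sketch under the assumption that a shared endpoint shows up as identical remote database and table names in both databases; I have not confirmed exactly which of the two names changes when with_copy = 1 is used, so the query returns both.

```sql
-- Compare the remote endpoints recorded in the old and the new database.
-- Identical remote_database_name / remote_table_name pairs mean both
-- databases are stretching into the same remote table.
SELECT N'StretchDBDemo' AS local_database,
       d.remote_database_name,
       t.remote_table_name
FROM [StretchDBDemo].sys.remote_data_archive_tables AS t
JOIN [StretchDBDemo].sys.remote_data_archive_databases AS d
    ON d.remote_database_id = t.remote_database_id
UNION ALL
SELECT N'StretchDBDemoNew',
       d.remote_database_name,
       t.remote_table_name
FROM [StretchDBDemoNew].sys.remote_data_archive_tables AS t
JOIN [StretchDBDemoNew].sys.remote_data_archive_databases AS d
    ON d.remote_database_id = t.remote_database_id;
```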

INSERT INTO [StretchDBDemoNew].[dbo].[People] ([PersonID], [FullName], [IsEmployee], [PhoneNumber], [EmailAddress]) VALUES (10, 'Bill', 0, '0','yourname@email.com');

HINT: For any rows I insert into the new database I will use a PersonID >= 10, as this helps with the demonstration.

Now this should get stretched to the Azure SQL Database, so switch to that query window and check it's there.

NOTE: It might take a few minutes for the reconciliation to occur.

Here is where things get interesting. After a few minutes, try querying the data again and see if there is any change. While I was writing this post the remote record disappeared; however, when I was writing the scripts it didn't. So what is happening? To find out, let's compare the local and remote data further.

With the new record still in Azure, query the old on-premises database. If you're lucky, you will find that the record inserted into the new database and stretched to Azure is also returned when you query the original database. Your experience here may differ, as it's all a matter of timing.

Just to add fuel to the fire I inserted another record into my new database that would be stretched to Azure.

INSERT INTO [StretchDBDemoNew].[dbo].[People] ([PersonID], [FullName], [IsEmployee], [PhoneNumber], [EmailAddress]) VALUES (11, 'Bart', 0, '0','yourname@email.com');

But what about our existing on-premises database? Remember, in my scenario we are only testing the migration, so you would assume there are still active connections querying that database and potentially data being updated, which would then stretch into Azure. So let's insert two records into that database which will be stretched.

INSERT INTO [StretchDBDemo].[dbo].[People] ([PersonID], [FullName], [IsEmployee], [PhoneNumber], [EmailAddress]) VALUES (4, 'Chad', 0, '0', 'yourname@email.com');
INSERT INTO [StretchDBDemo].[dbo].[People] ([PersonID], [FullName], [IsEmployee], [PhoneNumber], [EmailAddress]) VALUES (5, 'Dan', 0, '0', 'yourname@email.com');

So in our existing database we now have this data being returned

What about in our new database?

Wait a minute: those records 'Chad' and 'Dan' were just inserted into our existing database, and where did our records for 'Bill' and 'Bart' go?
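To reproduce this comparison yourself, a side-by-side query such as the sketch below returns what each on-premises database currently sees, local and remote rows combined. I have deliberately not included expected output, because which rows survive depends on the timing of the reconciliation.

```sql
-- What each on-premises database returns for dbo.People right now.
SELECT N'StretchDBDemo' AS source_database, PersonID, FullName, IsEmployee
FROM [StretchDBDemo].[dbo].[People]
UNION ALL
SELECT N'StretchDBDemoNew', PersonID, FullName, IsEmployee
FROM [StretchDBDemoNew].[dbo].[People]
ORDER BY source_database, PersonID;
```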

Here is the issue: with both on-premises databases pointing to the same remote table through the with_copy = 0 option, the reconciliation processes of the two databases conflict. One of the databases effectively becomes the master and overwrites the records the other sends. As I mentioned, the two times I ran through the scripts I got different results: one time the new database was the master, and the other time (while writing this blog) the original database was the master and overwrote the records.

So, the good news is that if you use the with_copy = 1 option, the databases use separate remote tables and therefore do not conflict. My recommendation is to ALWAYS USE "WITH_COPY = 1" when reauthorizing a database to the remote server.
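For reference, the recommended form of the reauthorization call looks like this. The credential name is a placeholder; use the name returned by sys.database_scoped_credentials in the restored database.

```sql
-- Reauthorize the restored database against a fresh copy of the remote data.
USE [StretchDBDemoNew];
GO
EXEC sys.sp_rda_reauthorize_db
     @credential = N'<credential name>',  -- placeholder, not a real name
     @with_copy  = 1;                     -- connect to a new copy of the remote data
GO
```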

That said, there are a few things to keep in mind. Over time, if you move the database a lot, you could end up with lots of tables in the remote database, so it is best to monitor that if you want to keep your costs down.
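One simple way to keep an eye on this is to list the tables on the remote side, oldest first. The sketch below is meant to be run while connected to the remote Azure SQL Database, not the on-premises instance. The commented-out DROP TABLE uses a placeholder name and should only be run once you have confirmed that no on-premises database still points at that table.

```sql
-- Run against the remote Azure SQL Database.
-- Stretched tables are hidden in Object Explorer but are listed in sys.tables.
SELECT s.name AS schema_name,
       t.name AS table_name,
       t.create_date
FROM sys.tables AS t
JOIN sys.schemas AS s
    ON s.schema_id = t.schema_id
ORDER BY t.create_date;

-- Manual cleanup of a remote table that is no longer referenced (placeholder name):
-- DROP TABLE [dbo].[<old remote table name>];
```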

Also, if at any stage you are querying the stretched tables in the on-premises databases while they are in this conflict situation, you could experience a number of cases of this error.

I hope this blog post helps when it comes time to plan your migrations. If you have any questions or feedback, please leave them in the comments section.

Get the complete demo scripts here.

Legal Stuff: The contents of this blog are provided “as-is”. The information, opinions and views expressed are those of the author and do not necessarily state or reflect those of any other company with affiliation to the products discussed. This includes any URLs or Tools. The author does not accept any responsibility from the use of the information or tools mentioned within this blog, and recommends adequate evaluation against your own requirements to measure suitability.